Whenever you glance down to read an instrument cluster, look at a map, or check who just texted you, your vehicle may travel tens of meters in the brief moment your eyes are off the road. For that moment, you are, for all practical purposes, driving blind. Thousands of people die and hundreds of thousands more are injured each year in crashes involving distracted driving, so it follows that eliminating a good portion of such distractions could prevent a corresponding share of those crashes.
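The “tens of meters” figure is simple arithmetic: distance equals speed multiplied by the time your eyes are away from the road. Here is a minimal Python sketch, assuming a two-second glance (an illustrative value, not one taken from the article):

```python
def distance_during_glance(speed_kmh: float, glance_s: float) -> float:
    """Meters traveled while the driver's eyes are off the road."""
    speed_ms = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_ms * glance_s


# Assumed two-second glance at a few typical speeds.
for speed in (50, 100, 130):
    print(f"{speed} km/h, 2 s glance: {distance_during_glance(speed, 2.0):.1f} m")
```

At 100 km/h, for example, a two-second glance covers roughly 56 m of road.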

That’s the idea behind the Head-up Display (HUD), at least in part. The HUD projects information exactly where it is needed: directly in the driver’s line of sight. Through a virtual image that appears to “float” just over the hood, drivers are served a stream of important information, including speed, warning signals, and even navigation arrows, all without having to take their eyes off the road ahead.

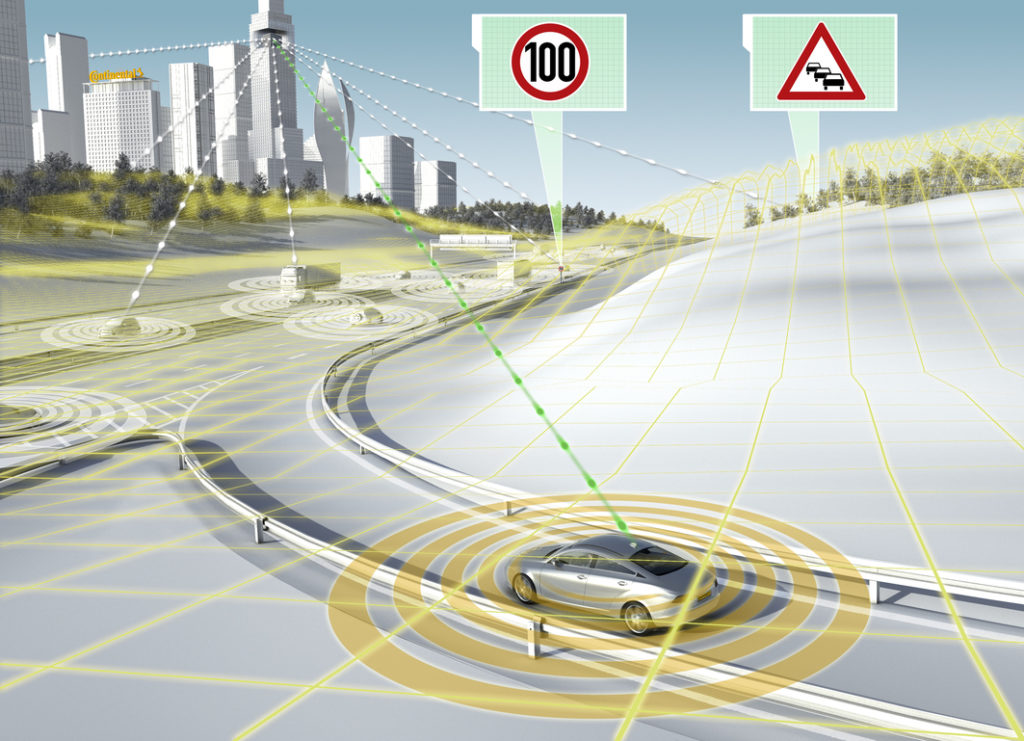

Realizing such a human-machine interface (HMI) experience requires a holistic engineering approach, and that’s exactly what Continental has applied. The company’s augmented reality (AR) HUD system integrates a wide range of technologies: sensors of many kinds (camera, LiDAR, biometric, ultrasonic), next-generation HUD technology (through its partnership with DigiLens), machine learning (enabling autonomous functions), GPS positioning, and even cloud connectivity for serving up digital map data, current traffic conditions, and other vital information.

As you might imagine, the rapidly escalating volume of data coming into a vehicle requires commensurate instrumentation and processing power, as well as tight integration with advanced driver assistance systems (ADAS), to digest that information and deliver it to the driver in real time in a cohesive, non-distracting way. Let’s focus on just a few of those technologies.

AR-HUD

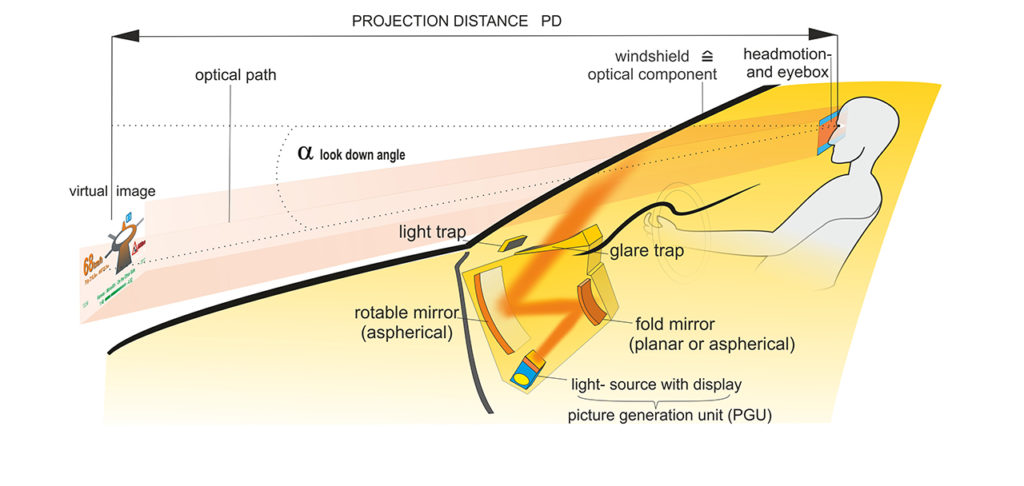

Continental has entered into a strategic partnership with DigiLens, a developer of ultrathin augmented reality “holographic” HUD systems. We’ll explore their technology in depth in a future issue, but one of their breakthroughs has been to shrink the HUD unit to one-third the size of the previous state of the art, freeing up extremely valuable real estate within the vehicle. Looking under the hood, the HUD unit’s graphical elements are generated with the help of a digital micromirror device (DMD), the same technology used in digital cinema projectors. The core of the picture generation unit (PGU) is an optical semiconductor comprising a matrix of several hundred thousand tiny mirrors, each of which can be tilted individually by ±10–12° via electrostatic fields into a reflecting (on, or bright) or non-reflecting (off, or dark) state. Each mirror represents one or more pixels in the projected image, with the number of mirrors corresponding to the image resolution.
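To make the mirror-matrix idea concrete, the following Python sketch models a DMD as a grid of binary mirrors. It is an illustration under stated assumptions, not a description of Continental’s or DigiLens’s hardware: the `MirrorMatrix` class, the 800 × 480 resolution, one mirror per pixel, and the choice of +12° as “on” are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed tilt magnitude; the article cites roughly +/-10-12 degrees.
TILT_DEG = 12.0


@dataclass
class MirrorMatrix:
    cols: int
    rows: int

    @property
    def mirror_count(self) -> int:
        # Assumes one mirror per pixel, so the count equals the resolution.
        return self.cols * self.rows

    def tilt_angles(self, frame: list[list[bool]]) -> list[list[float]]:
        """Map a binary frame (True = bright pixel) to per-mirror tilt angles.

        +TILT_DEG is assumed to reflect light toward the projection optics (on);
        -TILT_DEG is assumed to steer it away from the optics (off).
        """
        if len(frame) != self.rows or any(len(row) != self.cols for row in frame):
            raise ValueError("frame size must match the mirror matrix")
        return [[TILT_DEG if lit else -TILT_DEG for lit in row] for row in frame]


# A hypothetical 800 x 480 matrix holds 384,000 mirrors ("several hundred thousand").
print(MirrorMatrix(800, 480).mirror_count)
print(MirrorMatrix(3, 1).tilt_angles([[True, False, True]]))
```

Because each mirror has only two states, the image for any instant is effectively a binary mask; color and brightness come from how those states are sequenced over time.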

To generate a color image, the micromirror matrix is lit by three LEDs (red, green, and blue) that fire in rapid succession, one after another. The human eye “averages” the three color frames, creating the impression of a full-color, continuous picture. The image is then projected onto the windshield rather than onto a screen.
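The eye’s averaging of the red, green, and blue frames can be sketched as a time average per channel. This Python example is a simplified model, not the PGU’s actual drive scheme: it assumes each color subframe is split into a hypothetical `SLICES_PER_COLOR` number of time slices, and that a pixel’s mirror can be on for any number of them.

```python
from __future__ import annotations

# Hypothetical number of on/off time slices within each color subframe.
SLICES_PER_COLOR = 8


def perceived_color(on_slices: dict[str, int]) -> tuple[float, ...]:
    """Approximate the color the eye perceives for one pixel over one frame.

    The red, green, and blue LEDs fire one after another. During each color's
    subframe the pixel's mirror is on for some number of slices; because the
    eye averages the light over the whole frame, each channel's brightness is
    modeled as the fraction of that color's subframe the mirror spent on.
    """
    result = []
    for color in ("red", "green", "blue"):
        n = on_slices.get(color, 0)
        if not 0 <= n <= SLICES_PER_COLOR:
            raise ValueError(f"{color}: expected 0..{SLICES_PER_COLOR} slices, got {n}")
        result.append(n / SLICES_PER_COLOR)
    return tuple(result)


# Mirror on for the whole red subframe and half the green one: an orange pixel.
print(perceived_color({"red": 8, "green": 4}))
```

With only one slice per color, each pixel could show just eight colors (every mirror state on or off); finer shades in this model come from varying the on-time within each subframe.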

The HUD, though, is essentially the output device in this system. It takes its cues from Continental’s AR-Creator, the Grand Central Station for all the signal data coming into the vehicle.

AR-Creator

Jennifer Wahnschaff, Vice President, Instrumentation and Driver HMI for the Americas, explained the AR-Creator to us this way: “The AR-Creator takes all of the information coming from driver assistance systems, all of the sensors that are in the vehicle for adaptive safety systems—cameras, LiDAR, radar, wheel speed sensors—along with GPS, digital map data, and other sources and brings them all together to deliver the right information to the driver at the right time. Whether it’s alerting a drifting driver with a lane departure warning, or detecting that the driver is getting drowsy, or whether it’s providing information for navigation, or, if the vehicle has adaptive cruise control and is monitoring the distance to the car ahead, all of these things are crucial to safety, and that’s really what AR-Creator is about.”
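Delivering “the right information to the driver at the right time” implies some form of arbitration among many competing inputs. The article does not say how AR-Creator decides, so the following Python sketch is purely hypothetical: the `HudMessage` type, its numeric priority scale, and the limit of two simultaneous messages are assumptions chosen to illustrate priority-based selection.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class HudMessage:
    # Hypothetical urgency scale: lower number = more urgent.
    priority: int
    text: str = field(compare=False)
    source: str = field(compare=False)


def select_for_display(messages: list[HudMessage], max_items: int = 2) -> list[HudMessage]:
    """Return the most urgent messages, limiting how much the HUD shows at once."""
    # Ordering uses `priority` only; ties keep their input order.
    return heapq.nsmallest(max_items, messages)


incoming = [
    HudMessage(3, "Turn right in 300 m", "navigation"),
    HudMessage(2, "Following distance: 1.8 s", "adaptive cruise control"),
    HudMessage(0, "Lane departure", "lane camera"),
    HudMessage(1, "Drowsiness detected, take a break", "driver monitoring"),
]
for msg in select_for_display(incoming):
    print(f"[{msg.source}] {msg.text}")
```

Capping the number of items is one simple way to keep a display non-distracting; a real system would likely also weigh timing, context, and driver state.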

Wahnschaff adds that the system also provides a handshake with the driver. “With all these new technologies that are coming into safety systems, we have to have a way of communicating with the driver and building a level of trust so that the driver understands what the vehicle knows and what the vehicle doesn’t know. And by providing this in an individual way, it not only helps the driver to become more secure, but also more trusting in the technology.”

The system’s look-ahead capability is especially interesting. While the AR-HUD displays its information only about 7.5 meters ahead of the driver, the vehicle’s sensors look much farther down the road.

eHorizon

If you’re familiar with the popular traffic navigation app Waze, think of Continental’s eHorizon as something similar that takes the human element out of the equation: instead of reports from other drivers, it relies on information from the vehicle’s sensors and from the infrastructure on and around the roadway. In the future, as vehicle-to-vehicle (V2V) technologies proliferate, eHorizon will exploit them as well to provide even better driver updates.

On the subject of V2V, Wahnschaff points to the communications infrastructure already available in Europe, where traffic information is broadcast over the radio system. “That traffic information could also be integrated into the navigation system and propose a different route for the driver to get around a breakdown, or construction, or other situation.”
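Proposing a different route around a breakdown amounts to recomputing a shortest path with the affected road segment excluded. The article does not describe Continental’s routing logic; the Python sketch below uses Dijkstra’s algorithm as a standard stand-in, and the road network, node names, and travel times are hypothetical.

```python
from __future__ import annotations

import heapq

# Hypothetical road network: node -> {neighbor: travel time in minutes}.
ROADS: dict[str, dict[str, float]] = {
    "A": {"B": 5.0, "C": 6.0},
    "B": {"D": 5.0},
    "C": {"D": 7.0},
    "D": {},
}


def shortest_route(
    graph: dict[str, dict[str, float]],
    start: str,
    goal: str,
    blocked: frozenset[tuple[str, str]] | set[tuple[str, str]] = frozenset(),
) -> tuple[float, list[str]] | None:
    """Dijkstra's algorithm that skips road segments reported as blocked.

    Returns (total_minutes, [nodes...]) or None if the goal is unreachable.
    """
    queue: list[tuple[float, str, list[str]]] = [(0.0, start, [start])]
    visited: set[str] = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, minutes in graph.get(node, {}).items():
            # Ignore segments closed by a breakdown, construction, etc.
            if (node, nxt) in blocked or nxt in visited:
                continue
            heapq.heappush(queue, (cost + minutes, nxt, path + [nxt]))
    return None


print(shortest_route(ROADS, "A", "D"))                         # normal route via B
print(shortest_route(ROADS, "A", "D", blocked={("B", "D")}))   # breakdown between B and D
```

When a traffic report closes the B-to-D segment, the same search returns the slower but open route through C.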

As for the balance between cloud-based and local processing, there will always be trade-offs in how much of the processing burden is placed on the OEM and on the vehicle itself. Continental’s approach is to support both models, but Wahnschaff is quick to point out that as more and more information is processed, particularly GPS and map data, eHorizon relies on a healthy degree of connectivity. “Our philosophy,” Wahnschaff says, “is we adapt to the environment that we have in the vehicle, making the information increasingly accurate and sophisticated as infrastructure, sensors, and connectivity allow.”

eHorizon integrates topographical and digital map data with sensor data, such as GPS position, for predictive control of vehicle systems. Upcoming events, such as an uphill incline after the next corner, are taken into account early so the vehicle can optimize its response: eHorizon interprets map and sensor data and automatically adapts engine and transmission management accordingly.
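A minimal Python sketch of this kind of predictive control, under loudly stated assumptions: the `RoadSegment` map representation, the ten-second preview, the 4% “steep climb” threshold, and the rule of holding the current gear are all hypothetical, since the article does not describe eHorizon’s actual strategy. The point is only that map data lets the vehicle react to a climb before any onboard sensor could see it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RoadSegment:
    start_m: float    # distance ahead of the vehicle where the segment begins
    length_m: float
    grade_pct: float  # positive = uphill


def max_grade_ahead(segments: list[RoadSegment], horizon_m: float) -> float:
    """Steepest grade on any segment that overlaps [0, horizon_m] ahead."""
    grades = [
        s.grade_pct
        for s in segments
        if s.start_m <= horizon_m and s.start_m + s.length_m >= 0
    ]
    return max(grades, default=0.0)


def hold_gear_for_climb(
    segments: list[RoadSegment],
    speed_ms: float,
    lookahead_s: float = 10.0,         # hypothetical preview time
    climb_threshold_pct: float = 4.0,  # hypothetical "steep" threshold
) -> bool:
    """Hypothetical rule: suppress an upshift if a steep climb is coming soon."""
    horizon_m = speed_ms * lookahead_s
    return max_grade_ahead(segments, horizon_m) >= climb_threshold_pct


route = [RoadSegment(0, 300, 0.5), RoadSegment(300, 500, 6.0)]
print(hold_gear_for_climb(route, speed_ms=25))  # 250 m horizon: climb not yet in range
print(hold_gear_for_climb(route, speed_ms=35))  # 350 m horizon: climb in range
```

Scaling the horizon with speed keeps the preview time roughly constant, so a faster vehicle starts preparing for the climb from farther away.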

And, of course, 5G, expected to be deployed in a few short years, is going to change everything. As Wahnschaff muses, “But then we’ll be waiting for 6G and 7G and 8G!”

Projecting Navigation

Perhaps the most elegant manifestation of Continental’s AR-HUD is its ability to project navigation graphics that appear to lie directly on the surface of the road ahead.

The accompanying video shows AR-Creator HUD navigation in action, as seen through the windshield of Toyota’s Concept-i.

This is no small feat. How does one render a graphic that stays dynamically fixed to the 3D roadway? Continental has managed to decouple this function from the movements of the vehicle. And on top of that, it, too, must look ahead.
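Keeping a graphic fixed to the road means re-expressing its world position in the moving vehicle’s frame every time the vehicle moves. The article does not describe Continental’s method; the Python sketch below is a simplified 2D version that ignores pitch, roll, and the final projection into the HUD’s virtual image. The function name and the coordinate convention (x forward, y to the left, heading measured counterclockwise from the world x-axis) are assumptions.

```python
from __future__ import annotations

import math


def world_to_vehicle(
    point_xy: tuple[float, float],
    vehicle_xy: tuple[float, float],
    heading_rad: float,
) -> tuple[float, float]:
    """Express a world-fixed point in the vehicle's frame (x forward, y left)."""
    dx = point_xy[0] - vehicle_xy[0]
    dy = point_xy[1] - vehicle_xy[1]
    cos_h, sin_h = math.cos(heading_rad), math.sin(heading_rad)
    # Rotate the world offset by -heading to align it with the vehicle's axes.
    forward = cos_h * dx + sin_h * dy
    left = -sin_h * dx + cos_h * dy
    return forward, left


# A turn arrow anchored at a fixed world position, 100 m down a straight road.
arrow = (100.0, 0.0)
print(world_to_vehicle(arrow, vehicle_xy=(0.0, 0.0), heading_rad=0.0))
print(world_to_vehicle(arrow, vehicle_xy=(20.0, 0.0), heading_rad=0.0))  # after 20 m
```

Recomputing this transform every frame is what lets the arrow appear to stay put on the asphalt while the car approaches it.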

Continental’s Stefan Wentzel, who showed us the system at CES 2017, explains, “In this system we are integrating sensors and a camera that normally supports lane departure detection and warning, or recognition of obstacles, pedestrians, bicyclists, etc. The AR-Creator combines information from the camera’s image stream and the maps data. One of the challenges was that the camera only looks 60 to 70 meters ahead, but with the AR HUD, we have to provide a picture up to 200 meters down the road. So we extend the view of the camera in a virtual way. The other challenge we met is that GPS data is not always as precise as needed; there is always a few meters difference from where it says you are and where you actually are. So we correct the GPS data by overlaying the streaming camera image. You have to put the two together. And now that we know where we really are, we can extend the field of view.”
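Wentzel’s two steps, correcting GPS with the camera and then extending the view beyond the camera’s reach, can be sketched in Python. He does not say how the overlay is computed, so this is a hypothetical illustration: it assumes the camera measures the range to a landmark whose map position is known, and it merges that with GPS using an inverse-variance weighted average, a standard fusion technique swapped in here. All positions and uncertainties are made-up values; only the 70 m camera range (the upper end of his 60 to 70 m) and the 200 m HUD range come from the quote.

```python
from __future__ import annotations

import math


def camera_position_estimate(landmark_map_m: float, measured_range_m: float) -> float:
    """Position along the route implied by seeing a mapped landmark at a known range."""
    return landmark_map_m - measured_range_m


def fuse(gps_m: float, gps_sigma_m: float, cam_m: float, cam_sigma_m: float) -> tuple[float, float]:
    """Inverse-variance weighted average of two 1D position estimates."""
    w_gps = 1.0 / gps_sigma_m ** 2
    w_cam = 1.0 / cam_sigma_m ** 2
    estimate = (w_gps * gps_m + w_cam * cam_m) / (w_gps + w_cam)
    sigma = math.sqrt(1.0 / (w_gps + w_cam))
    return estimate, sigma


def lookahead_windows(
    position_m: float, camera_range_m: float = 70.0, hud_range_m: float = 200.0
) -> tuple[tuple[float, float], tuple[float, float]]:
    """Split the HUD's look-ahead into a camera-observed part and a map-derived part."""
    camera_part = (position_m, position_m + camera_range_m)
    map_part = (position_m + camera_range_m, position_m + hud_range_m)
    return camera_part, map_part


cam_m = camera_position_estimate(landmark_map_m=1250.0, measured_range_m=45.5)
pos, sigma = fuse(gps_m=1203.0, gps_sigma_m=3.0, cam_m=cam_m, cam_sigma_m=0.5)
print(f"corrected position: {pos:.2f} m (+/- {sigma:.2f} m)")
print(lookahead_windows(pos))
```

Because the camera-based estimate is assumed to be far more precise, the fused position lands close to it; the map then supplies road geometry for the stretch the camera cannot see.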

Which brings us to the last hurdle in realizing this remarkable technology. Continental’s engineers had to find a way to calculate the 3D objects within the driver’s view far enough in advance to forecast them, that is, before they become visible. Wentzel continues, “For example, as the sensors measure the roadway, if you calculate the new objects as they appear, the car has already driven some part of the way. That means you’ve got to calculate well into the future, extrapolating the data to forecast what’s down the road, relying again on this combination of maps, GPS, and camera data, because otherwise it’s too late.”
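One common way to compensate for the time between measuring the road and showing a frame is to predict where the vehicle will be when the frame appears. The Python sketch below uses a constant-speed, constant-turn-rate motion model; that model, the 100 ms latency, and the 50 m marker are assumptions for illustration, not details of Continental’s system.

```python
from __future__ import annotations

import math


def predict_pose(
    x: float, y: float, heading_rad: float,
    speed_ms: float, yaw_rate_rads: float, dt_s: float,
) -> tuple[float, float, float]:
    """Predict the vehicle pose dt_s seconds ahead.

    Assumes constant speed and constant turn rate over the interval.
    """
    if abs(yaw_rate_rads) < 1e-9:
        # Straight-line motion.
        return (
            x + speed_ms * dt_s * math.cos(heading_rad),
            y + speed_ms * dt_s * math.sin(heading_rad),
            heading_rad,
        )
    radius = speed_ms / yaw_rate_rads
    new_heading = heading_rad + yaw_rate_rads * dt_s
    return (
        x + radius * (math.sin(new_heading) - math.sin(heading_rad)),
        y - radius * (math.cos(new_heading) - math.cos(heading_rad)),
        new_heading,
    )


# Hypothetical 100 ms between measuring the road and the frame reaching the driver.
LATENCY_S = 0.1
marker_x = 50.0  # a navigation marker 50 m straight ahead when measured
x, y, _ = predict_pose(0.0, 0.0, 0.0, speed_ms=25.0, yaw_rate_rads=0.0, dt_s=LATENCY_S)
print(f"draw marker {marker_x - x:.1f} m ahead, not {marker_x:.1f} m")
```

At 25 m/s (90 km/h) the car covers 2.5 m in that tenth of a second, so a marker drawn from unpredicted data would sit visibly off its spot on the road.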

Taken together, the elements comprising AR-HUD yield a whole that is greater than the sum of its parts. And to fully appreciate that, it’s got to be experienced. Come 2020, when this technology gets rolled out into production vehicles, you just might be able to. Jennifer Wahnschaff sums it up: “I’m really excited about this technology. I enjoy driving the test vehicles. When you’re in a new environment it’s great to have as much information as possible for figuring out where you need to go. And reducing driver distraction by presenting this information in such a simple way, well, it is just so beneficial.”